Heuristics and Biases – The Science Of Decision Making

This article was originally published in the June 2015 edition, Vol 32 (2), of Business Information Review. References and links were checked and updated on 8th August 2019.

Abstract

“Heuristic” is a word from the Greek meaning “to discover.” It is an approach to problem solving that takes one’s personal experience into account. Heuristics provide strategies to scrutinise a limited number of signals and/or alternative choices in decision-making. Heuristics diminish the work of retrieving and storing information in memory, streamlining the decision-making process by reducing the amount of integrated information necessary in making the choice or passing judgment. However, while heuristics can speed up our problem-solving and decision-making processes, they can introduce errors and biased judgments. This paper looks at commonly used heuristics and their origins in human psychology. Understanding how heuristics work can give us better insight into our personal biases and influences, and (perhaps) lead to better problem solving and decision making.

Introduction

We make decisions and judgments every day – if we can trust someone, if we should do something (or not), which route to take, how to respond to someone’s question, the list is endless. If we carefully considered and analysed every possible outcome of these decisions and judgments, we would never get anything done!

Thankfully, our mind makes things easier for us by using efficient thinking strategies known as heuristics. A heuristic is a mental shortcut that helps us make decisions and judgments quickly without having to spend a lot of time researching and analysing information.

Heuristics play important roles in both problem-solving and decision-making.

When we are trying to solve a problem or make a decision, we often turn to these mental shortcuts when we need a quick solution.

For example, when walking down the street, you see a workman hauling up a pallet of bricks on a pulley. Without a break in stride, you would likely choose to walk around that area instead of directly underneath the bricks. Your intuition would tell you that walking under the bricks could be dangerous, so you make a snap judgment to walk around the danger zone. You would probably not stop and assess the entire situation or calculate the probability of the bricks falling on you or your chances of survival if that happened. You would use a heuristic to make the decision quickly and without using much mental effort.

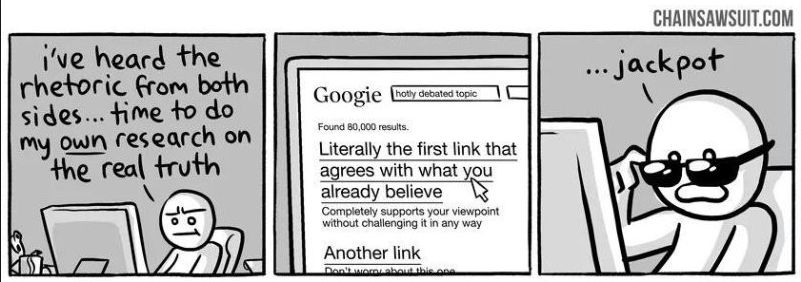

However, while heuristics can speed up our problem-solving and decision-making processes, they can introduce errors and biased judgments. Just because something has worked in the past does not mean that it will work again, and relying on an existing heuristic can make it difficult to see alternative solutions or come up with new ideas.

As a result of research and theorising, cognitive psychologists have outlined a host of heuristics people use in decision-making. Heuristics range from general to very specific and serve various functions. Specific examples include the “price heuristic”, in which people judge higher priced items to have higher quality than lower priced things and which is specific to consumer patterns, and the “outrage heuristic”, in which people consider how contemptible a crime is when deciding on the punishment (Shah & Oppenheimer, 2008). According to Shah and Oppenheimer, three important heuristics are the representativeness, availability, and anchoring and adjustment heuristics. These and other heuristics are discussed in the next section.

Heuristics: The Psychology and Science

System 1 and System 2

“Dual process” theories of cognition (DPT) have been popularised by Daniel Kahneman, a Nobel Prize-winning behavioural economist, who expounds the theory of “System 1” and “System 2”. System 1 is fast, automatic, effortless, associative and often emotionally charged, and thus difficult to control or modify; System 2 is slower, serial, effortful and deliberately controlled, and thus relatively flexible and potentially rule-governed.

System 1 is essentially an autopilot system in which we do things more easily through repetition. It filters out things in the environment that are irrelevant at that moment; it has high efficiency. It is based on the idea that neurons that fire together, wire together. It primes an idea so that one idea is more easily activated (wakening of associations). System 1 has a bias towards making thinking cheap and enables one to deal with information overload.

System 2 corrects and adjusts the perceptual blindness associated with System 1. It allows flexibility, giving nuance and precision more importance. Basically, System 1 is more associative and intuitive while System 2 is analytical and deliberative.

Availability Heuristic

This is the tendency to judge the frequency or likelihood of an event by the ease with which relevant instances come to mind. It operates on the assumption that if something can be recalled, it must be important, or at least more important than alternatives that are not as readily recalled. A decision maker relies upon knowledge that is readily available rather than examining other alternatives or procedures; as a result, individuals tend to weight their judgments toward more recent information, making new opinions biased toward the latest news.

In other words, the easier it is to recall the consequences of something, the greater those consequences are often perceived to be.

This is inaccurate because our recall of facts and events is distorted by the vividness of information, the number of repetitions we are exposed to through advertisements on radio and television, and their subsequent familiarity.

Here is an example: a survey conducted in 2010 in the U.S. examined the most feared ways to die. Six of the top ten results were: terrorist attacks, shark attacks, airplane crashes, murders, natural disasters, and falling. What do these six results have in common? Although they are the most “feared”, they are also some of the most unlikely causes of death in the United States.

- Terrorist attack: Your chances of dying from such an attack are 1 in 9.3 million, which is slightly greater than your risk of dying in an avalanche. Front-page news of terrorist incidents worldwide exacerbates the availability heuristic.

- Shark attacks: Even if you live near the ocean, your chances of being attacked by a shark are 1 in 11.5 million. In the last 500 years only 1,909 confirmed shark attacks occurred worldwide; of the 737 that happened in the United States, only 38 people died. The chances of being killed by a shark are much slimmer than those of being attacked: 1 in 264.1 million.

- Airplane crashes: On average, you could expect to be involved in a fatal airline accident only once every 19,000 years.

- Being murdered: “Worldwide, one person is murdered every 60 seconds!” “Every year, ___ people are murdered.” However, in the year 2000, about 520,000 people were murdered, compared with 6 million who died of cancer that same year.

- Natural disaster: The chances of dying in a natural disaster (e.g. hurricane, tornado, flood) are 1 in 3,357.

- Falling: In 2001, 12,000 people aged 65+ died from a fall. However, only 80 people die from falling from a tall height annually.

The actual annual leading causes of death in the U.S., the ones that should be feared, are:

- Tobacco usage: 435,000 deaths, 18.1% of total U.S. deaths

- Poor diet/physical inactivity: 400,000 deaths, 16.6%

- Alcohol consumption: 85,000 deaths, 3.5%

- Microbial agents: 75,000

- Toxic agents: 55,000

- Motor vehicle crashes: 43,000

- Incidents involving firearms: 29,000

- Sexual behaviours: 20,000

- Illicit use of drugs: 17,000
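Only the first three entries in the list carry a percentage. As a rough sketch, the remaining shares can be estimated from the figures already given: 435,000 deaths at 18.1% implies a total of about 2.4 million annual U.S. deaths. That implied total is an assumption derived from the list, not a figure stated in the source, and the variable names below are illustrative only.

```python
# Annual U.S. deaths by cause, copied from the list above.
deaths_by_cause = {
    "Tobacco usage": 435_000,
    "Poor diet/physical inactivity": 400_000,
    "Alcohol consumption": 85_000,
    "Microbial agents": 75_000,
    "Toxic agents": 55_000,
    "Motor vehicle crashes": 43_000,
    "Incidents involving firearms": 29_000,
    "Sexual behaviours": 20_000,
    "Illicit use of drugs": 17_000,
}

# Assumption: back out total deaths from the tobacco figure (435,000 = 18.1%).
implied_total_deaths = deaths_by_cause["Tobacco usage"] / 0.181

# Share of total deaths for every cause, using the implied total.
shares = {
    cause: deaths / implied_total_deaths
    for cause, deaths in deaths_by_cause.items()
}

for cause, share in shares.items():
    print(f"{cause}: {share:.1%}")
```

The shares computed this way for poor diet and alcohol agree with the 16.6% and 3.5% quoted in the list, which suggests the implied total is a reasonable basis for the other rows.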

Statistically speaking, we will not die in an airplane crash, be eaten by a shark, or fall to our death. Yet we are all much more scared of these unlikely events than we are of diabetes, heart disease, or traffic accidents.

When we’re asked to think of how likely something is, we ignore the statistical probabilities and instead ask ourselves a much simpler question: “How easily can I think of an example?” This is how the frequency and probability of events become skewed in our minds: we overestimate subjective probabilities, which causes overreaction. Dramatic deaths are more memorable and receive more news coverage, so examples from all different countries are constantly shown, intensifying the effects of this heuristic.

Availability provides a mechanism by which occurrences of extreme utility (or disutility) may appear more likely than they actually are.

Anchoring and Adjustment Heuristic

This is the tendency to judge the frequency or likelihood of an event by using a starting point called an anchor and then making adjustments up or down.

When people make quantitative estimates, their estimates may be heavily influenced by previous values of the item. For example, it is not an accident that a used car salesman always starts negotiating with a high price and then works down. The salesman is trying to get the consumer anchored on the high price so that when he offers a lower price, the consumer will estimate that the lower price represents a good value.

This is also a commonly used heuristic in the property market. Sellers may see more value in their homes than is actually there, and ask for a price so high that no buyer is going to be interested. In these situations, the asking price is not a good anchor, but it may still play a role in helping buyers decide how much to offer. When the price is too high or too low, the buyer will, of course, offer something different than what is being asked. But the question here is, how much different?

Let’s say that there is a house worth something between £450K and £500K. In one case, the owners ask for £425K in the hope that they will get lots of interest and people will outbid each other. They might get some offers higher than the asking price, but how high will they actually be? In the other case, the owners ask for £525K, perhaps because they bought it when the market was higher and can’t bear to sell for less. This asking price is clearly more than the house is worth, and buyers will offer below asking, but how much below? In both of these cases, people are still likely to use the initial asking price as an anchor and adjust their offers up or down from this price accordingly. In both cases, the house is worth the same and people should end up offering somewhere around £475K, but if people do apply the anchoring and adjustment heuristic, in the end, the higher priced house might ultimately go for more.

The key point about the anchoring heuristic is that different starting points yield different estimates, which are biased toward the initial value or number.
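The house example can be made concrete with a toy model of insufficient adjustment, in which a buyer starts at the asking price and moves only part of the way towards their own estimate of value. This is a sketch under stated assumptions, not a model taken from the research: the function `anchored_offer` and the adjustment factor of 0.6 are hypothetical, and £475K is simply the midpoint of the value range in the example.

```python
def anchored_offer(anchor: float, estimated_value: float, adjustment: float = 0.6) -> float:
    """Toy model: start at the anchor and adjust only part of the way
    towards the buyer's own estimate of value.

    adjustment = 1.0 would mean the anchor is ignored entirely;
    adjustment < 1.0 means the adjustment is insufficient (assumed here).
    """
    return anchor + adjustment * (estimated_value - anchor)


estimated_value = 475.0  # in £K, midpoint of the £450K to £500K range

low_ask_offer = anchored_offer(425.0, estimated_value)   # asking price £425K
high_ask_offer = anchored_offer(525.0, estimated_value)  # asking price £525K

print(f"Offer when asking £425K: £{low_ask_offer:.0f}K")
print(f"Offer when asking £525K: £{high_ask_offer:.0f}K")
```

With these assumed numbers the two offers fall on opposite sides of £475K, each pulled towards its own asking price, even though the house is the same in both cases.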

Representativeness Heuristic

The representativeness heuristic is a mental shortcut that helps us make a decision by comparing information to our mental prototypes or stereotypes. It is the tendency to judge the frequency or likelihood of an event by the extent to which it resembles the typical case. Heavy reliance on this leads people to ignore other factors that heavily influence the actual frequencies and likelihoods, such as rules of chance, independence, and norms.

For an illustration of judgment by representativeness, consider an individual who has been described by a friend as follows: “Steve is very shy and withdrawn, invariably helpful, but with little interest in people, or in the world of reality. A meek and tidy soul, he has a need for order and structure, and a passion for detail.” How do people assess the probability that Steve is engaged in a particular occupation from a list of possibilities (for example, farmer, salesman, airline pilot, librarian, or physician)? … In the representativeness heuristic, the probability that Steve is a librarian, for example, is assessed by the degree to which he is representative of, or similar to, the stereotype of a librarian.

Another example is the so-called gambler’s fallacy, the belief that runs of good and bad luck can occur. For example, if a coin toss turns up ‘heads’ multiple times in a row, many people think that ‘tails’ is a more likely occurrence in the next toss to “even things out”, even though each toss is an independent event not connected to the toss before or after it.

This heuristic, like others, saves us time and energy. We make a snap decision and assumption without thinking very much. Unfortunately, many examples of the representativeness heuristic involve succumbing to stereotypes or relying on information patterns, as in the sequence of heads and tails.
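The independence of coin tosses is easy to check with a small simulation. The sketch below is illustrative only: the function name, the fixed seed, the run length of three and the number of tosses are all arbitrary choices made for this example. It measures how often tails follows a run of heads in a simulated fair coin.

```python
import random


def tails_rate_after_heads_run(num_tosses: int, run_length: int, seed: int = 0) -> float:
    """Simulate fair coin tosses and return the share of tails among
    tosses that immediately follow at least `run_length` consecutive heads."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    tosses = [rng.random() < 0.5 for _ in range(num_tosses)]  # True = heads

    followers = 0     # tosses that come right after a run of heads
    tails = 0         # how many of those tosses are tails
    heads_streak = 0  # length of the current run of heads
    for is_heads in tosses:
        if heads_streak >= run_length:
            followers += 1
            if not is_heads:
                tails += 1
        heads_streak = heads_streak + 1 if is_heads else 0
    return tails / followers


rate = tails_rate_after_heads_run(num_tosses=200_000, run_length=3)
print(f"Share of tails after three heads in a row: {rate:.3f}")
```

If tails really were “due” after a run of heads, the printed share would sit well above one half; for independent tosses it should stay close to 0.5.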

Simulation Heuristic

This is the tendency to judge the frequency or likelihood of an event by the ease with which we can imagine (or mentally simulate) it. We mispredict the likelihood of the event because of the ease with which we can imagine it. It differs from the availability heuristic in how previous experience is involved.

For example, would we be more upset at missing our train home by 5 minutes or by 45 minutes?

There is NO difference between the two outcomes, since either way the train has been missed, so we might expect roughly half of people to choose each option. The reality is different. People are much more likely to state they would be upset by missing the train by 5 minutes than by 45 minutes.

This is the simulation heuristic in action. We can visualise and imagine what it would be like to just miss the train. We have a mental script that shows an individual frantically running down the platform and barely missing the train. The scenario is easy to imagine. But we don’t have a mental script for what it is like to miss the train by 45 minutes. We can’t visualise this happening.

Another example is if someone missed winning the lottery by just one number. It is likely that they would be more upset than someone who didn’t have any of the winning numbers. The simulation heuristic explains this discrepancy by suggesting that it would be very easy for the person who “just missed” winning to imagine events being different based on the last number matching their number. As a result, the possibility of them winning seems much more likely and thus the fact that they lost much more disappointing.

As such, we make choices based on the ease with which we can imagine examples of scenarios. This mental shortcut is the basis of the simulation heuristic.

Peak-and-end Heuristic

The peak-end rule is a heuristic in which we judge our past experiences almost entirely on how they were at their peak (whether pleasant or unpleasant) and how they ended. When we do this we discard virtually all other information, including net pleasantness or unpleasantness and how long the experience lasted. We only remember certain details of a whole experience; the peak and the end. Whether most parts of the experience were acceptable is without influence on the user’s perception of the experience as a whole.

An experiment involving the application of pain was used to demonstrate this heuristic. The series 2-5-8 and 2-5-8-5 refer to periods in which pain was applied to volunteers every 5 minutes. Rationally, adding 5 extra minutes of pain can only increase total discomfort, yet the experiment showed that the longer period of pain (20 minutes), with diminished discomfort at the end, was rated as less discomforting than the shorter period of pain (15 minutes), which ended with increased discomfort.
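The contrast between the two series can be sketched numerically. The code below assumes that each number is a pain rating for one 5-minute interval and that remembered discomfort is the simple average of the peak and the final rating. Both are simplifying assumptions for illustration, and the function names are hypothetical.

```python
from typing import Sequence


def total_discomfort(ratings: Sequence[int]) -> int:
    """Sum of all ratings: what a purely 'rational' tally would use."""
    return sum(ratings)


def peak_end_score(ratings: Sequence[int]) -> float:
    """Average of the worst moment and the final moment (assumed formula)."""
    return (max(ratings) + ratings[-1]) / 2


short_series = [2, 5, 8]     # 15 minutes, ends at its most painful
long_series = [2, 5, 8, 5]   # 20 minutes, ends with reduced pain

for name, series in [("2-5-8", short_series), ("2-5-8-5", long_series)]:
    print(
        f"{name}: total = {total_discomfort(series)}, "
        f"peak-end = {peak_end_score(series)}"
    )
```

Under these assumptions the longer series has the higher total (20 against 15) but the lower peak-end score (6.5 against 8.0), which matches the direction of the reported ratings.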

Designing for the peak-end rule means focusing less on what matters little and more on what brings the most value to the user’s experience. The peak-and-end heuristic is known for how we misremember the past.

Base-Rate Heuristic

The base-rate heuristic is a mental shortcut that helps us to make a decision based on probability. For example, imagine you live in a big city and hear an animal howling around midnight. You would probably assume it was just a dog, as wolves aren’t likely to be found in the city. Statistically, a wolf howling in the city would be very improbable.

Base rate neglect is the tendency for people to mistakenly judge the likelihood of a situation by not taking into account all relevant data.

Here is an example. Let’s say you have just met with your doctor who has informed you that you have tested positive for a typically fatal disease. To make things worse, this test is accurate 95% of the time. Most people would sadly conclude that there was a 95% chance that they have the disease, a virtual death sentence. In fact, one would need to know the prevalence of the disease in the general population to determine the actual likelihood that the test was correct. If the prevalence of the disease is 1 in 1000, the likelihood that you actually have the disease (based on this test) is less than 2%.

When this exact problem was given to students at Harvard Medical School, almost half the students computed the likelihood that the patient had the disease as 95%. The average response was 56%. Similar studies with practicing physicians have had comparable findings; medical experts are prone to base rate neglect.

Base rate neglect is a fundamental flaw in human reasoning, resulting from our innate weakness in analysing complex probability problems. It is an example of where our intuitive judgements or instincts can lead us astray. Having an understanding of base rate neglect, along with the supporting math, can help us arrive at more accurate judgements, conclusions and decisions.

Solution for Medical Test Problem

Let’s assume a model where 100,000 people are tested for the disease. Since the disease affects 1 in 1000 people, we would expect 100 people to have the disease. That means that 99,900 people would not have the disease. Of those 99,900, 5% (4,995 people) would receive a false positive diagnosis. That compares to only 95 of the 100 people with the disease receiving a correct positive diagnosis. Therefore, the probability upon receiving a positive test that one actually has the disease is about 1.9%: 95 of the 5,090 positive results, i.e. 95/(95 + 4,995). The table below illustrates the calculation:

| Test result | Have disease: Yes | Have disease: No | Probability of having the disease |
| --- | --- | --- | --- |
| Positive | 95 | 4,995 | 1.87% |
| Negative | 5 | 94,905 | 0.01% |
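The same result follows from Bayes’ rule without the 100,000-person model. The sketch below reads “accurate 95% of the time” as a 95% true positive rate and a 5% false positive rate, which is the interpretation the worked numbers use; the function and parameter names are illustrative.

```python
def probability_of_disease_given_positive(
    prevalence: float,
    true_positive_rate: float,
    false_positive_rate: float,
) -> float:
    """Bayes' rule: P(disease | positive test)."""
    # Share of the whole population that is ill AND tests positive.
    true_positives = prevalence * true_positive_rate
    # Share of the whole population that is healthy AND tests positive.
    false_positives = (1 - prevalence) * false_positive_rate
    return true_positives / (true_positives + false_positives)


posterior = probability_of_disease_given_positive(
    prevalence=1 / 1000,        # disease affects 1 in 1000 people
    true_positive_rate=0.95,    # assumed reading of "95% accurate"
    false_positive_rate=0.05,   # assumed reading of "95% accurate"
)
print(f"Chance of disease after a positive test: {posterior:.2%}")
```

Changing `prevalence` shows how strongly the answer depends on the base rate: the rarer the disease, the more the false positives outnumber the true positives.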

Affect-as-information Heuristic

Have you ever wondered why you feel inclined to go with your gut feelings when making a decision? This isn’t a glitch in your reasoning; in fact, it’s a phenomenon known as the affect heuristic, a mental shortcut that helps you to make decisions quickly by bringing your emotional response (or “affect”) into play. The affect heuristic is a swift, involuntary response to a stimulus that speeds up the time it takes to process information. Researchers have found that if we have pleasant feelings about something, we see the benefits as high and the risks as low, and vice versa. As such, the affect heuristic behaves as a first and fast response mechanism in decision-making.

Heuristic processing assumes that affective processing, or emotional processing, occurs outside our awareness, with people simply making sense of their emotional reactions as they happen. As situations become more complicated and unanticipated, mood becomes more influential in driving evaluations and responses. This process is known as the “affect-as-information” mechanism. A key assertion of the related Affect Infusion Model (AIM) is that the effects of mood tend to be exacerbated in complex situations that demand substantial cognitive processing.

People in a positive mood will interpret the environment as benign. Hence, they process information globally and heuristically. In contrast, those in a negative mood will interpret the environment as problematic and they will process information locally and diagnostically. People tend to use the affect-as-information heuristic when the evaluation objective is affective in nature, when information is too complex, or when there are time constraints (Clore et al. 1994).

In simple terms, the affect heuristic works as follows: imagine a child who lives with a cuddly collection of well-mannered dogs and who comes across a strange dog. Also imagine a second child who was recently bitten severely by the neighbour’s cocker spaniel. The former child will associate dogs with pleasant feelings, and will unconsciously judge the risk in saying hello to the new dog as low and the benefit as high. The latter child will associate dogs with fear and pain, and will judge the risk in getting close to the strange dog as high and the benefit as low. Without thinking about it, the former will probably approach the dog in question, while the latter will not. Both children display the affect heuristic in action: an involuntary emotional response that influences decision-making.

Other Heuristics

The previous sections described the more commonly used heuristics. The following heuristics are also noted here for completeness, but without detailed descriptions or examples.

- “Consistency heuristic” is a heuristic where a person responds to a situation in a way that allows them to remain consistent.

- “Educated guess” is a heuristic that allows a person to reach a conclusion without exhaustive research. With an educated guess a person considers what they have observed in the past, and applies that history to a situation where a more definite answer has not yet been decided.

- “Absurdity heuristic” is an approach to a situation that is very atypical and unlikely – in other words, a situation that is absurd. This particular heuristic is applied when a claim or a belief seems silly, or seems to defy common sense.

- “Common sense” is a heuristic that is applied to a problem based on an individual’s observation of a situation. It is a practical and prudent approach that is applied to a decision where the right and wrong answers seem relatively clear cut.

- “Contagion heuristic” causes an individual to avoid something that is thought to be bad or contaminated. For example, when eggs are recalled due to a salmonella outbreak, someone might apply this simple solution and decide to avoid eggs altogether to prevent sickness.

- “Working backward” allows a person to solve a problem by assuming that they have already solved it, and working backward in their minds to see how such a solution might have been reached.

- “Familiarity heuristic” allows someone to approach an issue or problem based on the fact that the situation is one with which the individual is familiar, and so one should act the same way they acted in the same situation before.

- “Scarcity heuristic” is used when a particular object becomes rare or scarce. This approach suggests that if something is scarce, then it is more desirable to obtain.

- “Rule of thumb” applies a broad approach to problem solving. It is a simple heuristic that allows an individual to make an approximation without having to do exhaustive research.

Summary and Conclusion

We make hundreds, if not thousands, of decisions each day, from what shirt to wear, to which train to catch to work, what to read, who to smile at, or who to ignore. Some decisions are limited to our own actions; some decisions may affect other people, either directly or implicitly. For example, we might make a decision about who to assign to a particular project – which will directly affect that person and their subsequent behaviour. Alternatively, the fact that we did not acknowledge a smile or greeting from someone – even though we saw it – may affect the subsequent behaviours and attitudes of that person (e.g. to stereotype you as indifferent and not to greet you the next time they see you).

Many of these decisions are made intuitively, or to be more precise, using one or more of the heuristics previously described. We don’t consciously choose a heuristic; it presents itself according to the context and environment we populate. The need to process large amounts of information in minimal time will draw upon those heuristics that we’ve previously relied upon. The amazing thing is that even where we haven’t triggered detailed cognitive analysis of all the available data in order to reach what we believe is an evidence-based decision, more often than not it’s the right decision. For example, there are masses of information reaching our senses before we cross the road: the distance and estimated speeds of cars and cyclists, the presence of other people who may or may not be obstructing our progress, etc. The fact that we got to the other side of the road safely justified our decision to cross when we did.

Fortunately, most of us are not called upon to make life-changing decisions, but if we were, we would naturally want to take the time to analyse all of the data available and check and double-check that we’ve interpreted it correctly. But what if that time is not available? Consider, for example, a surgeon undertaking a routine operation where the patient suddenly goes into cardiac arrest. Heuristics will enable the surgeon to instantly make – in all probability – the right decisions, e.g. the availability heuristic that enables recall of similar circumstances and the associated decisions. But at the same time, relying on precedent and recall will sometimes trip us up when we come across the unique.

This article merely touches on some of the social psychology research that underpins how we make decisions. Being aware of how we make decisions gives us some insight into the inherent biases we might use – often unconsciously – in making those decisions, in particular the tendencies towards stereotyping, prejudice and discrimination.

The key point of this article is to raise awareness that while heuristics can speed up our problem-solving and decision-making processes, they can also introduce errors and biased judgments. Just because something has worked in the past does not mean that it will work again, and relying on heuristics can make it difficult for us to see alternative solutions or come up with new ideas.

We will always tend towards saving our brains from doing any extra work in cognitively analysing vast amounts of data if we think there is a shortcut to the answer. Fortunately the application of heuristics makes this work more often than not, but the consequences of getting it wrong have to be taken into account.

Perhaps heuristics are best summed up by this quote from Daniel Kahneman:

“This is the essence of intuitive heuristics: when faced with a difficult question, we often answer an easier one instead, usually without noticing the substitution.”

Perhaps it would do us all good to ponder, now and again, how we came to a particular decision, and whether, on reflection it was the right one!

References and Further Reading

- Cervone, D., & Peake, P. K. (1986). Anchoring, efficacy, and action: The influence of judgmental heuristics on self-efficacy judgments and behavior. Journal of Personality and Social Psychology, 50, 492-501

- Chapman, G. B., & Bornstein, B. H. (1996). The more you ask for, the more you get: Anchoring in personal injury verdicts. Applied Cognitive Psychology, 10, 519-540

- Chapman, L.J., 1967. “Illusory correlation in observational report”. Journal of Verbal Learning 6: 151–155.

- Chapman, G. B., & Johnson, E. J. (1994). The limits of anchoring. Journal of Behavioral Decision Making, 7, 223-242

- Carroll, J.S., 1978. … an event on expectations for the event: An interpretation in terms of the availability heuristic. Journal of Experimental Social Psychology.

- Clore, G.L., Schwarz, N., and Conway, M. 1994. Affective causes and consequences of social information processing. Handbook of social cognition.

- Epley, N., Keysar, B., Van Boven, L., & Gilovich, T. (2004). Perspective taking as egocentric anchoring and adjustment. Journal of Personality and Social Psychology, 87, 327-339.

- Folkes, V.S., 1988. The Availability Heuristic and Perceived Risk. Journal of Consumer Research.

- Gabrielcik, A. and Fazio, R.H., 1984. Priming and frequency estimation: A strict test of the availability heuristic. Personality and Social Psychology Bulletin.

- Gilovich T., Griffin, D.W., Kahneman, D., 2002. Heuristics and Biases: The Psychology of Intuitive Judgment. Cambridge University Press

- Jacowitz, K. E., & Kahneman, D. (1995). Measures of anchoring in estimation tasks. Personality and Social Psychology Bulletin, 21, 1161-1166.

- Kahneman, D., 2003. Maps of Bounded Rationality: Psychology for Behavioral Economics.

- Katsikopoulos, K.V., 2010. Psychological Heuristics for Making Inferences: Definition, Performance, and the Emerging Theory and Practice. http://www.econ.upf.edu/docs/seminars/katsikopoulos.pdf

- Mussweiler, T., & Strack, F. (1999). Hypothesis-consistent testing and semantic priming in the anchoring paradigm: A selective accessibility model. Journal of Experimental Social Psychology, 35, 136-164.

- Mussweiler, T., & Strack, F. (2000). Numeric judgments under uncertainty: The role of knowledge in anchoring. Journal of Experimental Social Psychology, 36, 495-518.

- Northcraft, G. B., & Neale, M. A. (1987). Experts, amateurs, and real estate: An anchoring-and-adjustment perspective on property pricing decisions. Organizational Behavior and Human Decision Processes, 39, 84-97

- Quizlet – Social Psychology Heuristics https://quizlet.com/7346737/social-psychology-heuristics-flash-cards/

- Schwarz, N., et al., 1991. Ease of retrieval as information: Another look at the availability heuristic. Journal of Personality and Social Psychology.

- Schwarz, N. and Vaughn, L.A., 2002. The availability heuristic revisited: Ease of recall and content of recall as distinct sources of …. Heuristics and biases: The psychology of intuitive judgment.

- Shedler, J. and Manis, M., 1986. Can the availability heuristic explain vividness effects. Journal of Personality and Social Psychology.

- Strack, F., & Mussweiler, T. (1997). Explaining the enigmatic anchoring effect: Mechanisms of selective accessibility. Journal of Personality & Social Psychology, 73, 437-446.

- Tversky, A. and Kahneman, D., 1973. Availability: A heuristic for judging frequency and probability. Cognitive Psychology.

- Waenke, M., Schwarz, N., and Bless, H., 1995. The availability heuristic revisited: Experienced ease of retrieval in mundane frequency estimates. Acta Psychologica.

- http://www.theguardian.com/world/2011/sep/05/september-11-road-deaths

- https://diplopi.files.wordpress.com/2014/10/ebola.jpg

- Wansink, B., Kent, R. J., & Hoch, S. J. (1998). An anchoring and adjustment model of purchase quantity decisions. Journal of Marketing Research, 35, 71-81.

- Wegener, D. T., Petty, R. E., Detweiler-Bedell, B. T., Jarvis, W., & Blair G. (2001). Implications of attitude change theories for numerical anchoring: Anchor plausibility and the limits of anchor effectiveness. Journal of Experimental Social Psychology, 37, 62-69.

- Wilson, T. D., Houston, C. E., Etling, K. M., & Brekke, N. (1996). A new look at anchoring effects: Basic anchoring and its antecedents. Journal of Experimental Psychology: General, 125, 387-402.

- Wright, W. F., & Anderson, U. (1989). Effects of situation familiarity and financial incentives on use of the anchoring and adjustment heuristic for probability assessment. Organizational Behavior and Human Decision Processes, 44, 68-82

Helpful Problem Solving Strategies from Peak – Science-backed hacks to push your brain further.

The Ultimate Guide to Problem Solving in the Workplace – Some additional material on cognitive biases, decision making and problem solving – if you don’t mind the embedded links to furniture products.

Author Biography

Stephen Dale is a freelance community and collaboration ecologist with experience in creating off-line and on-line environments that foster conversations and engagement. He is both an evangelist and practitioner in the use of collaborative technologies and Social Media applications to support personal learning and development, and delivers occasional training and master-classes on the use of social technologies and social networks for improving digital literacies.
